Manage the Laravel .env file using AWS Parameter Store

When an application hosted on AWS EC2 instances relies on an environment file, the way the Laravel framework needs its .env file, managing environment variables is always a pain.

This is especially true when you have multiple EC2 instances running production in an auto-scaling setup. Please note that these are not host OS environment variables, but the application framework's environment variables stored in its env file.

You may alternatively call them application configuration files. You usually do not commit them to Git or Bitbucket because they may contain sensitive information, e.g. database passwords, AWS access credentials etc.

Problem statement :

- You have the .env environment file inside your EC2 AMI

- When you spin up an instance, the .env file comes from the AMI specified in the launch configuration, assuming you have auto-scaling set up

- If you want to update any existing .env variable, you need to build a new AMI and roll it out to the production servers

If your production environment is set up like this, you may have experienced some pain when changing .env variables, especially when a quick turnaround is required because of an urgent bug and an .env variable has to be updated ASAP.

EC2 user_data to the rescue :

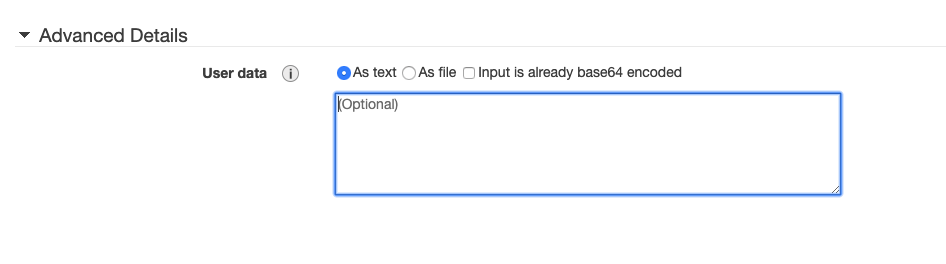

When you spin up a new instance, you have an option in Step 3: Configure Instance Details screen inside Advanced Details called user_data. This looks something like below :

You can write shell scripts in text area or upload a bash file as per your choice. When you launch an instance in Amazon EC2, these shell scripts will be run after the instance starts. You can also pass cloud-init directives but we will stick to shell script for this use case to fetch the environment variables from systems manager parameter store and generate an environment file, in our case .env file under laravel root.

Setting up variables in parameter store :

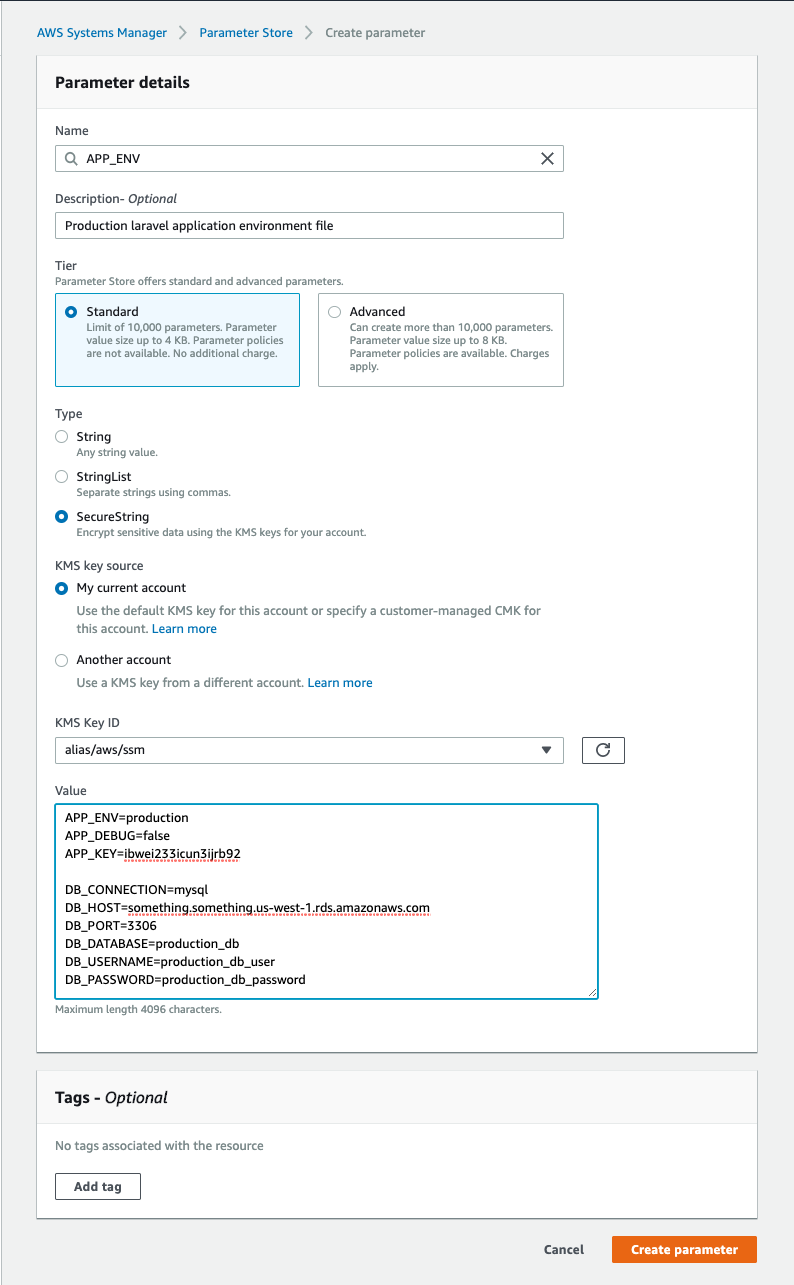

AWS has a service called Systems Manager, which contains a sub-service for storing configuration data and secrets. This is called Parameter Store. A parameter can be stored as a String, StringList or SecureString. We will be using the SecureString option, which makes sure the parameter value (in our case the environment file contents) is encrypted inside AWS.

You may ask why we store the entire .env file content in a single text area instead of creating one parameter per variable in the .env file. That's a perfectly valid point. However, storing it in a single parameter reduces the overall maintenance effort and also keeps the shell script that retrieves the values very compact.

You can use the steps below to store your .env file inside Parameter Store :

- Log in to the AWS console and switch to the region which contains your production setup

- Go to Systems Manager and click on Parameter Store

- Click on Create Parameter

- Add Name and Description

- Select Tier as Standard

- Select Type as SecureString

- Select the KMS key which manages encryption, as per your choice

- Enter the entire .env contents into the text area

- Click on Create Parameter to save the parameter
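The same steps can also be done from the command line. The following is only a sketch: it assumes the AWS CLI is installed and configured with credentials that are allowed to call ssm:PutParameter, and the function name store_env_parameter is made up for illustration. No KMS key is passed, so the account's default key for Parameter Store would be used.

```shell
#!/bin/bash
# Hypothetical helper: upload a local .env file as one SecureString parameter.
# Usage: store_env_parameter <parameter-name> <region> <path-to-env-file>
store_env_parameter() {
  name="$1"
  region="$2"
  env_file="$3"

  # Refuse to upload a missing or empty file.
  if [ ! -s "$env_file" ]; then
    echo "env file '$env_file' is missing or empty" >&2
    return 1
  fi

  # file:// makes the CLI read the value from disk, so the secrets do not
  # end up in the shell history. --overwrite updates an existing parameter.
  aws ssm put-parameter \
    --name "$name" \
    --region "$region" \
    --type SecureString \
    --tier Standard \
    --overwrite \
    --value "file://$env_file"
}
```

For example, `store_env_parameter APP_ENV us-west-1 ./.env` would upload the file in the current directory. Keep in mind that the Standard tier limits a parameter value to 4 KB, so a very large .env file will not fit.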

Accessing the parameter store values :

The EC2 instance which runs the application will be accessing these parameter store values, so you need to make sure the instance's IAM role has the following permissions in its policy.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "VisualEditor1",
          "Effect": "Allow",
          "Action": [
            "ssm:GetParameters",
            "ssm:GetParameter"
          ],
          "Resource": [
            "arn:aws:ssm:*:*:parameter/*"
          ]
        }
      ]
    }
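The policy above lets the instance read every parameter in every region. If you prefer to limit it to the single parameter that holds the .env contents, the Resource entry can be narrowed to that parameter's ARN. The helper below is a small sketch for building that ARN; the function name parameter_arn and the account ID 123456789012 are placeholders, not values from this setup.

```shell
#!/bin/bash
# Hypothetical helper: print the ARN of a single Parameter Store parameter,
# suitable for the "Resource" list of the policy.
# Usage: parameter_arn <region> <account-id> <parameter-name>
parameter_arn() {
  region="$1"
  account_id="$2"
  # Hierarchical names start with "/", but the ARN has only one slash after
  # "parameter", so strip a leading slash from the name.
  name="${3#/}"
  printf 'arn:aws:ssm:%s:%s:parameter/%s\n' "$region" "$account_id" "$name"
}

# 123456789012 is a placeholder account ID.
parameter_arn us-west-1 123456789012 APP_ENV
```

If you selected a customer managed KMS key for the SecureString, the role will most likely also need kms:Decrypt on that key.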

Shell script to generate .env :

We will be using the following shell script to generate .env :

    #!/bin/bash

    # Please update the variables below as per your production setup
    PARAMETER="APP_ENV"
    REGION="us-west-1"
    WEB_DIR="/var/www/html"
    WEB_USER="www-data"

    # Get the parameter and write it into the .env file inside the application root
    # (jq -r prints the raw string; without -r the value is written as one quoted JSON string)
    aws ssm get-parameter --with-decryption --name "$PARAMETER" --region "$REGION" | jq -r '.Parameter.Value' > "$WEB_DIR/.env"

    # Hand the file over to the web user
    cd "$WEB_DIR"
    chown "$WEB_USER": .env

    # Clear the Laravel configuration cache
    sudo -u "$WEB_USER" php artisan config:clear
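One thing to watch with the script above is that the redirect overwrites .env even when the AWS call fails, which can leave an empty file behind. A more defensive variant could look like the sketch below. The function name refresh_env is hypothetical, and it uses the CLI's own --query and --output text options in place of jq, which is a substitution on my part and not part of the original script.

```shell
#!/bin/bash
# Hypothetical helper: fetch the parameter into a temp file and only replace
# .env when the download succeeded and is not empty.
# Usage: refresh_env <parameter-name> <region> <web-dir>
refresh_env() {
  name="$1"
  region="$2"
  web_dir="$3"
  tmp_file="$(mktemp "$web_dir/.env.XXXXXX")" || return 1

  # --query/--output text prints the raw value, so no jq is needed.
  if ! aws ssm get-parameter --with-decryption \
        --name "$name" --region "$region" \
        --query 'Parameter.Value' --output text > "$tmp_file"; then
    echo "could not read parameter '$name'" >&2
    rm -f "$tmp_file"
    return 1
  fi

  if [ ! -s "$tmp_file" ]; then
    echo "parameter '$name' is empty, keeping the old .env" >&2
    rm -f "$tmp_file"
    return 1
  fi

  # A rename inside the same directory is atomic, so readers never see a
  # half-written file.
  mv "$tmp_file" "$web_dir/.env"
}
```

You would call it as `refresh_env APP_ENV us-west-1 /var/www/html` and then run the same chown and `php artisan config:clear` steps as before, since mktemp creates the file readable by its owner only.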

Putting the pieces together :

Let us now look at a very generic overview of how these pieces fit together :

- You will have your .env file stored in AWS Systems Manager Parameter Store, encrypted at rest

- When a new instance spins up, it pulls the env string and writes it into a .env file. This happens through the user_data setting

- It then clears the application config cache, or runs any dependent commands, so that the new environment file takes effect

Fundamental implementation situations :

Let's say you have added a new variable to your env parameter. You expect it to be reflected ASAP in the following situations :

- All the new EC2 instances which spin up after that point should always use the new env file variables :

This can be easily implemented with user_data, as discussed earlier. When you spin up an instance manually, add the above shell script to the user_data field so that it pulls the latest .env from the parameter store.

If you have auto-scaling enabled, make sure your launch configuration or launch template has the user_data field set to the shell script from the previous section. Then, whenever a new EC2 instance spins up, it first fetches the environment parameter from AWS Systems Manager Parameter Store and creates a brand new .env file.

- The existing instances which are already running should fetch the updated env file variables :

Let's first set aside the case where your instance is not in any auto-scaling group. There you can manually SSH into the instance and fetch the new .env (or automate it using Solution B).

The main point of concern is when you have auto-scaling set up and production is running on multiple instances. There are 2 possible solutions here. The first one is very easy to implement, whereas the second one can be a pain. Let's discuss both :

Solution A :

Terminate your existing auto-scaling instances one by one. Your auto-scaling setup will then spin up new instances. As discussed earlier, provided your launch configuration already has the shell script in its user_data setting to pull the updated environment variables from the parameter store at instance start, the new instances will come up with the updated environment variables in their .env files.

To add more automation, you may also perform the terminations using a Lambda function or Ansible, triggered when the Systems Manager parameter is updated.
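As a rough illustration of Solution A, the one-by-one termination could be scripted with the AWS CLI. This is a sketch under assumptions: the function name recycle_asg_instances is made up, the caller needs permission for the two autoscaling calls, and the fixed pause between terminations is a crude stand-in for a real health check on the replacement instance.

```shell
#!/bin/bash
# Hypothetical helper: replace the instances of an auto-scaling group one at a time.
# Usage: recycle_asg_instances <asg-name> <region> [seconds-between-terminations]
recycle_asg_instances() {
  asg_name="$1"
  region="$2"
  pause="${3:-300}"

  # List the instance IDs currently attached to the group.
  instance_ids="$(aws autoscaling describe-auto-scaling-groups \
    --auto-scaling-group-names "$asg_name" --region "$region" \
    --query 'AutoScalingGroups[0].Instances[].InstanceId' \
    --output text)" || return 1

  if [ -z "$instance_ids" ] || [ "$instance_ids" = "None" ]; then
    echo "no instances found in '$asg_name'" >&2
    return 1
  fi

  for instance_id in $instance_ids; do
    echo "terminating $instance_id"
    # Keep the desired capacity unchanged so the group launches a replacement.
    aws autoscaling terminate-instance-in-auto-scaling-group \
      --instance-id "$instance_id" --region "$region" \
      --no-should-decrement-desired-capacity || return 1
    # Crude wait for the replacement to boot before touching the next one.
    sleep "$pause"
  done
}
```

A call such as `recycle_asg_instances my-production-asg us-west-1 300` (the group name is a placeholder) would cycle through the group with five minutes between terminations.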

Solution B :

This matters for applications where ephemeral storage is important for some ongoing tasks. If we terminate the instances, the production application will lose some of its in-progress data. In this case we need an automated way to run the shell script on all the running instances to update their .env variables.

You can use AWS Lambda for this purpose. The bottom line is: when the Systems Manager parameter is updated, it triggers a Lambda function. That Lambda function SSHes into all your running production instances and updates the .env file.

There is already a GitHub repo I added some time back on running SSH commands on EC2 instances. You can click here to know more about it.
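The Lambda code itself is not shown here, but the same idea can be sketched in plain shell, run from any machine that can reach the instances over SSH. Everything specific in it is an assumption for illustration: the function name push_env_update, selecting instances by a tag, and the remote script path that is expected to contain the .env script from earlier.

```shell
#!/bin/bash
# Hypothetical helper: rerun the .env script on every running instance with a tag.
# Usage: push_env_update <region> <tag-key> <tag-value> <ssh-user> <remote-script>
push_env_update() {
  region="$1"; tag_key="$2"; tag_value="$3"; ssh_user="$4"; remote_script="$5"

  # Private IPs of all running instances that carry the given tag.
  ips="$(aws ec2 describe-instances --region "$region" \
    --filters "Name=tag:$tag_key,Values=$tag_value" \
              "Name=instance-state-name,Values=running" \
    --query 'Reservations[].Instances[].PrivateIpAddress' \
    --output text)" || return 1

  failed=0
  for ip in $ips; do
    echo "updating $ip"
    # -n keeps ssh from reading stdin; BatchMode fails fast instead of
    # prompting for a password.
    if ! ssh -n -o BatchMode=yes -o ConnectTimeout=10 \
          "$ssh_user@$ip" "sudo $remote_script"; then
      echo "update failed on $ip" >&2
      failed=1
    fi
  done
  return "$failed"
}
```

For instance, `push_env_update us-west-1 Environment production ubuntu /usr/local/bin/refresh-env.sh` would try every matching instance and return a non-zero status if any of them could not be updated; the tag, user and path here are placeholders.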

My two cents :

This may well look like overhead. But as your application grows, setting these things up will make your life so much easier.